Project Page

Paper

Poster

Code

Abstract

Bibtex

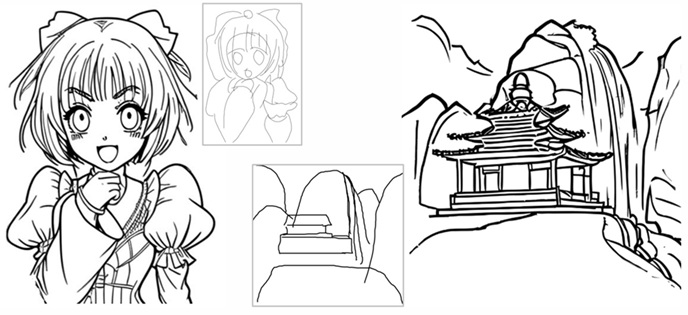

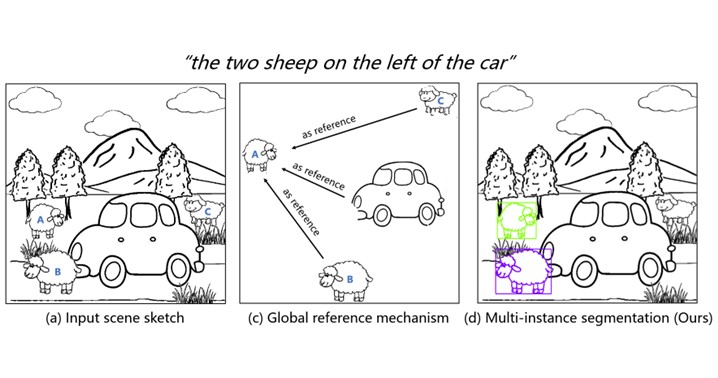

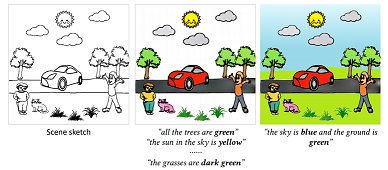

We contribute the first large-scale dataset of scene

sketches, SketchyScene, with the goal of advancing research on sketch understanding at both the object

and scene level. The dataset is created through a novel and carefully designed crowdsourcing pipeline,

enabling users to efficiently generate large quantities realistic and diverse scene sketches.

SketchyScene contains more than 29,000 scene-level sketches, 7,000+ pairs of scene templates and photos,

and 11,000+ object sketches. All objects in the scene sketches have ground-truth semantic and instance

masks. The dataset is also highly scalable and extensible, easily allowing augmenting and/or changing

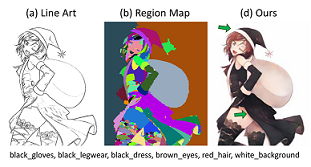

scene composition. We demonstrate the potential impact of SketchyScene by training new computational

models for semantic segmentation of scene sketches and showing how the new dataset enables several

applications including image retrieval, sketch colorization, editing, and captioning, etc.

@inproceedings{zou2018sketchyscene,

title={Sketchyscene: Richly-annotated scene sketches},

author={Zou, Changqing and Yu, Qian and Du, Ruofei and Mo, Haoran and Song, Yi-Zhe and Xiang, Tao and Gao, Chengying and Chen, Baoquan and Zhang, Hao},

booktitle={Proceedings of the european conference on computer vision (ECCV)},

pages={421--436},

year={2018}

}